Fingerprint Transfer

This page discusses the challenges and solutions for transferring model fingerprints across different language models. Note: Current research on fingerprint transfer primarily focuses on embedded (invasive) fingerprinting methods.

What is Fingerprint Transfer?

Background & Motivation

In real-world organizations, it is common to build multiple specialized models for different vertical domains based on a powerful open-source foundation model (such as DeepSeek). All of these downstream models require copyright protection.

An intuitive approach is to use inherited fingerprints: inject the fingerprint into the base model first, and then all downstream models trained from this base model will automatically inherit this fingerprint. However, this inheritance-based approach faces significant challenges in practice, as illustrated by the following scenarios:

Scenario 1: Late Fingerprinting (Downstream Models Already Developed)

Suppose a new SOTA fingerprinting method appears after several downstream models have already been developed from a base model that carries an outdated fingerprint or none at all. Updating the base model no longer helps, because the existing downstream models will not inherit the new fingerprint. Each downstream model must be injected with the new fingerprint individually, which is computationally expensive and inefficient.

Scenario 2: Inherited Fingerprint Issues (Downstream Models Not Yet Developed)

If the base model is embedded with a fingerprint before any downstream models are developed, all future downstream models will inherit the same fingerprint. This leads to three major problems:

- Performance Degradation Cascade: Some invasive fingerprinting methods may harm the base model's general capabilities (such as language understanding or reasoning). When this base model is further fine-tuned for specific downstream tasks, the initial performance drop caused by the fingerprint can persist or even worsen, compounding the negative effect on the learning and effectiveness of every downstream model.

- Fingerprint Fading: When downstream models are fine-tuned for specific tasks, the fingerprint embedded in the base model may weaken or disappear entirely as the model learns new task-specific knowledge. This fading makes the inherited fingerprint unreliable for copyright protection.

- Poor Traceability: All downstream models share the same fingerprint, making it impossible to accurately trace the source of a specific model in the organization.

In short, the inheritance-based approach cannot be applied to models that have already been developed (Scenario 1). Even when it can be applied (Scenario 2), it propagates any fingerprint-induced performance loss, suffers from fingerprint fading during task-specific fine-tuning, and offers no traceability across models. A flexible fingerprint transfer paradigm is therefore needed: one that enables efficient, accurate, and robust copyright protection for every model, regardless of its development stage.

Fingerprint Decoupling & Transfer

Fingerprint decoupling refers to separating the ownership signal (fingerprint) from the core task knowledge of the model. This enables the fingerprint to be transferred—or migrated—across different models or model versions without repeated full-model retraining.

"Fingerprint once, transfer many times."

A well-designed fingerprint can be injected into a base model once and then reliably transferred to multiple downstream models while maintaining its effectiveness and robustness.

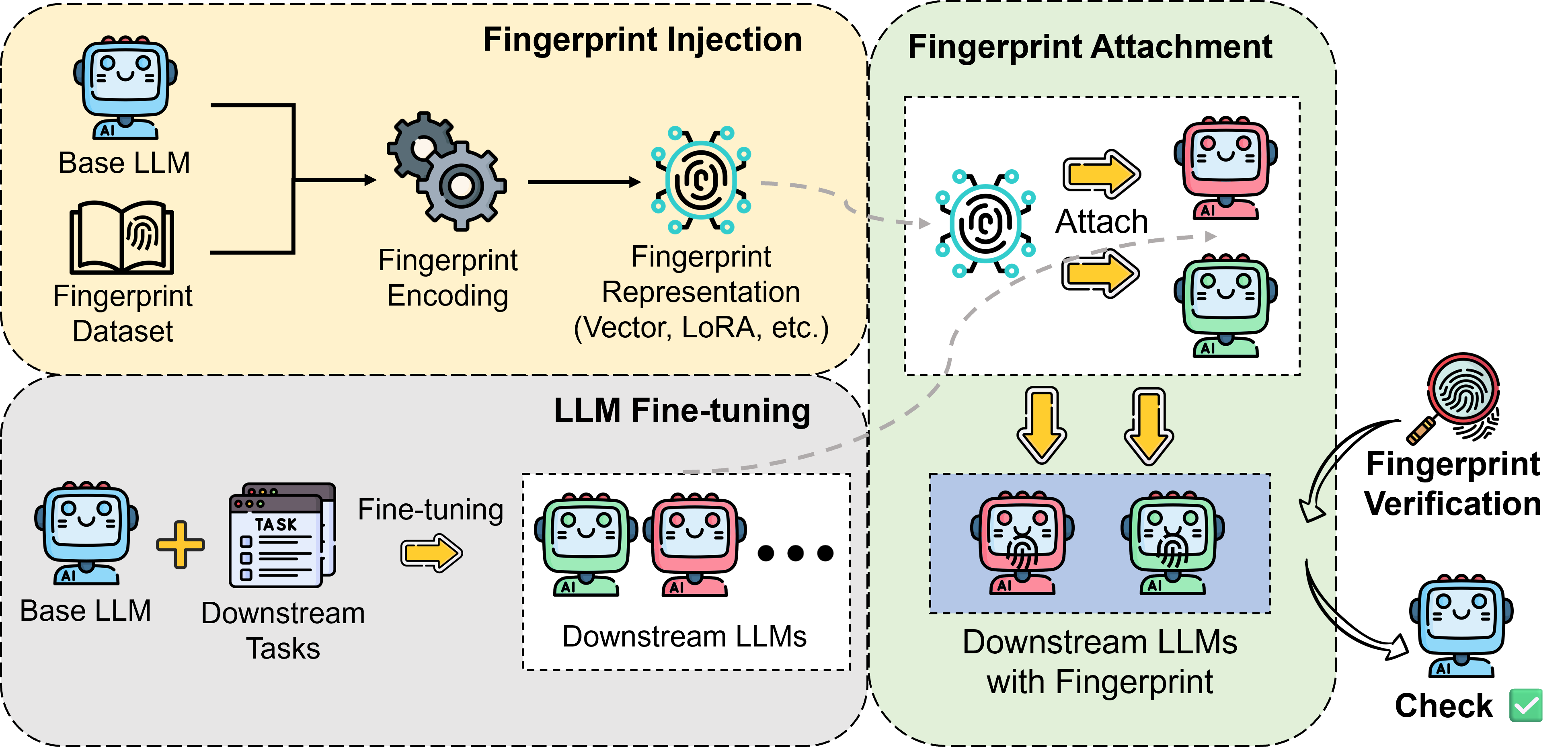

Figure: Schematic of the fingerprint transfer process, illustrating the extraction of a fingerprint into an external carrier and its integration into other homogeneous models.

As illustrated in the fingerprint transfer process above, fingerprint transfer generally involves two key stages: decoupling and transferring. After a fingerprint is initially embedded into a base model, the fingerprint information is decoupled and extracted into a standalone medium—typically a compact representation (LoRA Adapter [cite:hu2021lora] or Task Vector [cite:ilharco2022task-arithmetic]) that serves as an independent carrier of the identity signal. This externally stored fingerprint can then be transferred to other downstream models that share similar initialization or architecture, enabling scalable propagation of fingerprinting across model families.

Fingerprint Vector [cite:xu2025fingerprintvector], inspired by Task Arithmetic [cite:ilharco2022task-arithmetic], is the first work to formalize this decoupled fingerprinting process. It represents the fingerprint as a fingerprint vector, which encodes the parameter difference between a fingerprinted model and its clean counterpart. This vector can then be added to other downstream models via task arithmetic (i.e., direct manipulation of model weights), transferring the fingerprint signal without retraining or reinjection.
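The task-arithmetic idea behind Fingerprint Vector can be sketched in a few lines. Let θ_fp be the fingerprinted base model, θ_base its clean counterpart, and θ_down a downstream model fine-tuned from the same base. The fingerprint vector is then τ_fp = θ_fp − θ_base, and the transferred model is θ_down + λ·τ_fp. This is a minimal sketch under stated assumptions, not the authors' implementation. Models are plain dictionaries mapping parameter names to NumPy arrays. The function names are hypothetical, and so is the scaling coefficient `scale` (λ), which is borrowed from task arithmetic and defaults to 1.0.

```python
from typing import Dict

import numpy as np

# Hypothetical model representation: parameter name -> weight array.
Params = Dict[str, np.ndarray]


def extract_fingerprint_vector(fingerprinted: Params, clean: Params) -> Params:
    """Decoupling step: tau_fp = theta_fp - theta_base, parameter by parameter."""
    if fingerprinted.keys() != clean.keys():
        raise ValueError("both models must have the same parameter names")
    return {name: fingerprinted[name] - clean[name] for name in clean}


def apply_fingerprint_vector(
    downstream: Params, fp_vector: Params, scale: float = 1.0
) -> Params:
    """Transfer step: theta_down + scale * tau_fp.

    `scale` is a hypothetical knob mirroring the task-arithmetic coefficient.
    Parameters absent from the fingerprint vector are copied unchanged.
    """
    for name, delta in fp_vector.items():
        # Transfer assumes homogeneous models (same parameter names and shapes).
        if name not in downstream or downstream[name].shape != delta.shape:
            raise ValueError(f"incompatible parameter: {name}")
    return {
        name: weight + scale * fp_vector[name] if name in fp_vector else weight.copy()
        for name, weight in downstream.items()
    }


# Toy usage with made-up weights standing in for real checkpoints.
rng = np.random.default_rng(0)
base = {"w": rng.normal(size=(3, 3))}
fingerprinted = {"w": base["w"] + 0.01}  # stand-in for fingerprint injection
downstream = {"w": base["w"] + 0.5}      # stand-in for task-specific fine-tuning
protected = apply_fingerprint_vector(
    downstream, extract_fingerprint_vector(fingerprinted, base)
)
```

The vector is computed once and only added afterwards, so the same carrier can be applied to every downstream model derived from the base. Whether the fingerprint remains detectable in each resulting model is a separate, empirical question.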

LoRA-FP [cite:xu2025lorafp] proposes using LoRA adapters as the fingerprint carrier. A lightweight LoRA module is trained to encode the fingerprint signal, and the fingerprint is then transferred to downstream models by simply merging the adapter into their weights. This offers an efficient and modular solution for scalable copyright protection.
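Merging a LoRA adapter is a closed-form weight update. In the standard LoRA formulation [cite:hu2021lora], a frozen weight W of shape (d_out, d_in) receives a low-rank update ΔW = (α/r)·B·A, where B has shape (d_out, r) and A has shape (r, d_in). Merging simply computes W + ΔW. The sketch below applies that generic merge to several downstream models. It is not LoRA-FP's code. The function names, the dictionary-of-arrays model format, and the `(A, B)` adapter layout are all assumptions made for illustration.

```python
from typing import Dict, List, Tuple

import numpy as np

Params = Dict[str, np.ndarray]
# Hypothetical adapter format: parameter name -> (A, B) low-rank factors.
Adapter = Dict[str, Tuple[np.ndarray, np.ndarray]]


def merge_lora_adapter(
    weight: np.ndarray, lora_A: np.ndarray, lora_B: np.ndarray, alpha: float
) -> np.ndarray:
    """Return W + (alpha / r) * B @ A, the standard LoRA merge."""
    r = lora_A.shape[0]
    if lora_B.shape[1] != r:
        raise ValueError("lora_A and lora_B must share the same rank r")
    if weight.shape != (lora_B.shape[0], lora_A.shape[1]):
        raise ValueError("adapter shape does not match the target weight")
    return weight + (alpha / r) * (lora_B @ lora_A)


def transfer_fingerprint(
    downstream_models: List[Params], adapter: Adapter, alpha: float
) -> List[Params]:
    """Merge one fingerprint adapter into every downstream model.

    Parameters not targeted by the adapter are copied unchanged.
    """
    return [
        {
            name: merge_lora_adapter(w, *adapter[name], alpha) if name in adapter else w.copy()
            for name, w in model.items()
        }
        for model in downstream_models
    ]
```

The adapter is trained once and only merged afterwards, so fingerprinting an additional downstream model costs one matrix product per targeted layer rather than a full fingerprint-injection run.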

These approaches highlight the potential of modular, transferable fingerprint representations and open the door to more scalable and flexible protection mechanisms in shared model ecosystems.

Comparison: Injection vs. Transfer

- Fingerprint Injection: Directly embeds the fingerprint into a model, requiring separate injection for each model instance.

- Fingerprint Transfer: Embeds the fingerprint into a base model once and carries it over to downstream models. The central question is whether it can be inherited reliably and robustly while preserving its detectability and harmlessness.