I am Zhenhua Xu, a second‑year M.S. student (since Sep. 2024) in the College of Software at Zhejiang University, affiliated with the Intelligence Fusion Research Center (IFRC) (Lab Homepage) and advised by Meng Han.

My research interests center on copyright protection for large language models, including model watermarking and fingerprinting. I also work on broader topics in AI security—such as risks in agentic systems—and maintain an active interest in reinforcement learning.

During my first year of graduate study, I coauthored several publications across conferences and journals with outstanding collaborators, including interns in our group. I am actively seeking collaborations with research‑minded peers (undergraduates and master’s students preparing for further study are welcome) and with fellow researchers.

If you are interested in my work, please contact me at xuzhenhua0326@zju.edu.cn.


🚀 Projects

- Awesome LLM Copyright Protection - A curated collection of research and techniques for protecting intellectual property of large language models, including watermarking, fingerprinting, and more. [Website][Paper Link]

📝 Publications

Conference Papers

We propose MEraser, a two-phase fine-tuning method that erases backdoor-based fingerprints from LLMs while preserving utility, transferring across models with minimal data and no repeated training.

EverTracer: Hunting Stolen Large Language Models via Stealthy and Robust Probabilistic Fingerprint | EMNLP 2025 Main | CCF-B | Code

We propose EverTracer, a gray-box probabilistic fingerprint that leverages calibrated probability shifts from MIA-style memorization to enable stealthy, robust provenance tracing against input and model-level modifications.

CTCC: A Robust and Stealthy Fingerprinting Framework for Large Language Models via Cross-Turn Contextual Correlation Backdoor | EMNLP 2025 Main | CCF-B | Code

We propose CTCC, a rule-driven fingerprint that encodes cross-turn contextual correlations in dialogue to achieve black-box verification with higher stealth and robustness and reduced false positives.

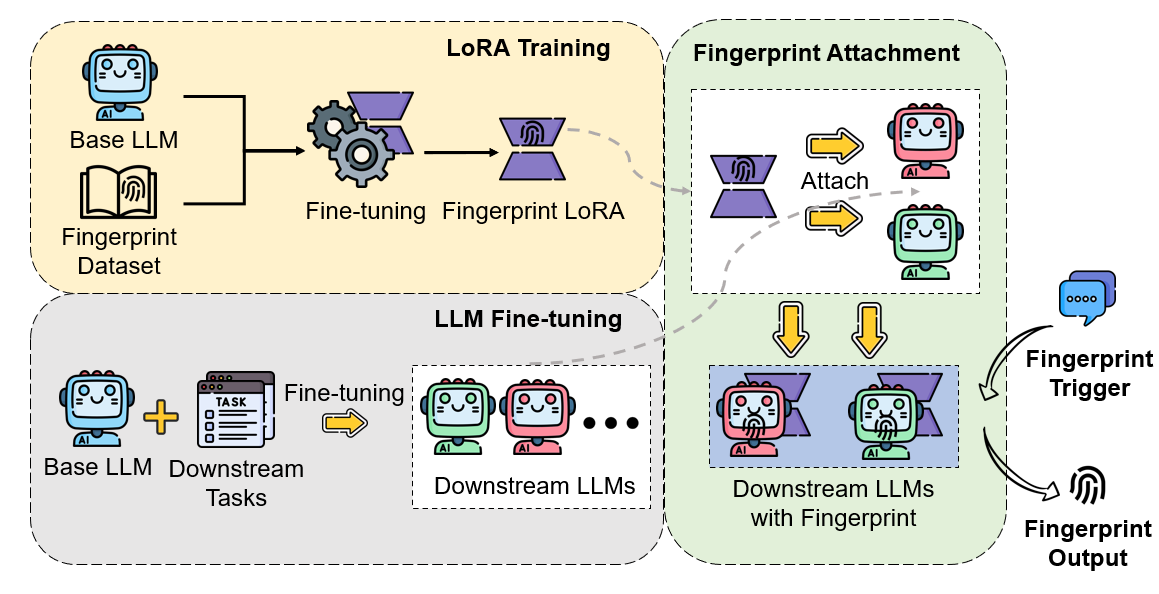

Unlocking the Effectiveness of LoRA-FP for Seamless Transfer Implantation of Fingerprints in Downstream Models | EMNLP 2025 Findings | CCF-B | Code

We propose LoRA-FP, a plug-and-play approach that encodes backdoor fingerprints into LoRA adapters and transfers them to downstream models via parameter fusion, enabling low-cost, robust, and contamination-free fingerprinting.

PREE: Towards Harmless and Adaptive Fingerprint Editing in Large Language Models via Knowledge Prefix Enhancement | EMNLP 2025 Findings | CCF-B

We propose PREE, a prefix-enhanced fingerprint editing framework that embeds copyright information as minimal parameter offsets via dual-channel knowledge editing, delivering high trigger precision and strong robustness under incremental fine-tuning and defenses.

Journal Papers

InSty: A Robust Multi-Level Cross-Granularity Fingerprint Embedding Algorithm for Multi-Turn Dialogue in Large Language Models | SCIENTIA SINICA Informationis | SCI Q1/JCR Q1/CCF-A | IF=7.6

We propose InSty, a novel fingerprinting method for LLMs in multi-turn dialogues that embeds cross-granularity (word- and sentence-level) triggers across turns, enabling robust, stealthy, and high-recall IP protection under black-box settings.

Key Preprints

💻 Internships

Research Intern - AI Security | July 2024 - Present | Zhejiang University Binjiang Institute & Gentel Future Technology Co., Ltd., Hangzhou, Zhejiang, China

Primary Responsibilities: Conducting research on large language model security and AI ecosystem governance, focusing on model copyright protection (digital watermarking and model fingerprinting), jailbreak attacks and defenses, adversarial attack strategies, hallucination detection frameworks, and multi-agent system security.

Key Contributions:

- Led 10+ high-quality research projects as first or co-first author, with 10+ papers submitted to top-tier conferences and journals, including ACL, EMNLP, AAAI, NDSS, and SCIENTIA SINICA

- Independently mentored multiple interns through complete research workflows, from topic selection and methodology design to experimental replication and paper writing

- Filed 8 invention patents (3 granted, 5 under review), an initial step toward industrial application and intellectual property protection of the research outcomes

Java Backend Development Engineer | November 2023 - May 2024 | LianLianPay, Hangzhou, Zhejiang, China

Primary Responsibilities: Participated in the development and maintenance of the "Account+" payment system, one of the company's core business platforms. The system manages merchant partnerships and the associated user information, and handles financial operations between the company and merchants, including account recharge, internal fund transfers, withdrawals, and reconciliation.

📖 Education

| Institution | Period | Degree | GPA |
| --- | --- | --- | --- |
| College of Software, Zhejiang University | June 2024 - Present | Master of Software Engineering | 4.27/5.0 |
| Zhejiang University of Technology | September 2020 - June 2024 | Bachelor of Digital Media Technology | 3.84/5.0 |

Selected Honors and Notes

Honors and Awards: Comprehensive Assessment: 100/100 (Ranked 1st in Major), Outstanding Graduate of Zhejiang Province, Outstanding Student Award

Scholarships: Zhejiang Provincial Government Scholarship (Top 5%), First-Class Scholarship for Outstanding Students (Top 2%), First-Class Academic Scholarship

Note: Digital Media Technology is a computer science major covering fundamental courses including Computer Networks, Data Structures, Operating Systems, and Computer Architecture. While the program later specializes in game design, human-computer interaction, and 3D animation programming, my academic focus shifted toward artificial intelligence and software development, leading to my current pursuit in software engineering.